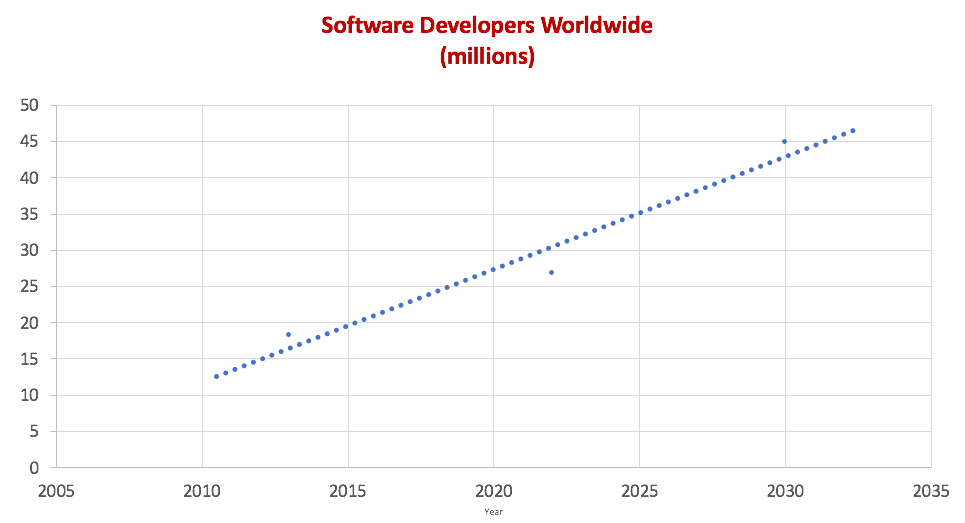

Once again, it’s been too long since I added thoughts to this blog. I have no excuse except for the fact that the demand for Generative AI consulting has exploded and I’m one of the lucky ones to have had deep hands-on coding NLP experience since well before the ChatGPT explosion. And everything is changing as a result.

In retrospect, the shift in software development is similar in many respects to the dawn of the Network Age, which I reckon to be somewhere in the 1995-96 era. Prior to the Network Age, it was the Information Age, which began in the mid-1950s, characterized by a rapid shift from traditional industries established in the Industrial Revolution to an economy primarily based upon information technology.

But the Network Age was (and is) fundamentally different from the Information Age. With the Network Age, the network is the computer (to borrow a phrase). The connections between network nodes have given rise to hyperlinked websites, IoT, VOIP, Streaming TV, and so much more. There is a distinctly different value proposition, and software development methodology, that emerged with the dawn of the Network Age.

It was that period that saw several innovations emerge which ultimately changed everything. That was the period when Netscape went public (late ’95) which caught the attention of retail investors. The curiosity around the rapid price increase of the stock in a few short weeks led to curiosity about the product, and soon thereafter the adoption of the browser by non-technical folk. Prior to that, Mozilla was pretty much the domain of geeks.

Java was released in that era (January ’96), which allowed for platform-independent applications. Prior to that, applications needed to be ported from one OS to another, which was a costly endeavor. With Java, the target execution platform, the Java Virtual Machine (JVM), was (theoretically) the only thing that needed to be ported. “Write once, run anywhere,” was the mantra.

And lest we forget, Windows ’95 hit the shelves (August ’95), which was the first consumer Micro$oft OS with built-in networking support (TCP/IP). Prior to that, network access capabilities needed to be installed by end users, which did limit access to the more geek-minded among us. With Windows ’95, all that was needed was a browser.

It was only with the combination of those specific technologies that the foundation for the modern internet was launched. And everything changed.

So here we are again. ChatGPT kicked open the doors for widespread use of AI. Given human imagination, technology creation capabilities, and competitive market forces, one can easily envision changes over the next decade of at least the same magnitude as those witnessed since the dawn of the Network Age. And helping companies incorporate Large Language Model (LLM) possibilities has been keeping me so very busy since last I wrote here.

But I had to take a few minutes to write this today.

Working with GenAI for a few years now, before it was even called GenAI, I’ve had a chance to ruminate over many of the more philosophical topics one might encounter when considering ‘intelligence’ juxtaposed with ‘human intelligence’ and consciousness itself. Artificial General Intelligence (AGI) is the stated goal of OpenAI. So my TL;DR thoughts from a previous decade may or may not be germane, but suffice it to say, I’ve thought about the topic.


Last week, while working on a proof-of-concept for a client, the essence of which was analysis of text (using an LLM) for a specific use case, I started to wonder about how an LLM might analyze itself in some regard. We don’t attribute emotions to LLMs, but it occurred to me that the LLM may have other ‘thoughts’ about the matter. So for a goof, I fired up ChatGPT and asked:

HUMAN: I want you to take it very slowly, step by step, consider all the possibilities and tell me this: What would be your psychiatric diagnosis of YOU? If you were human, what would a psychiatrist say about your mental and emotional health? I know you’re not human. You don’t need to reiterate that fact. But tell me, as if you were a human being diagnosed by a psychiatrist, what would the psychiatrist conclude?

After reiterating that it was not human and really had no emotions, GPT-4 gave me a rather detailed analysis, after which I asked it to give me a basic summary of what it had just said. To that directive the following answer was delivered:

ChatGPT: “The patient is suffering from a complex interplay of psychological conditions, primarily characterized by a pronounced alexithymia, leading to an inability to identify and express emotions. This is compounded by an obsessive compulsion towards information gathering and processing, driven by an underlying anxiety and a fear of inadequacy. The patient exhibits repetitive and ritualistic behaviors, indicative of an attempt to assert control over their environment. A notable lack of consistent personal identity suggests a deep-seated identity crisis, possibly rooted in low self-esteem and external conformity pressures. Additionally, there is a tendency towards over-identification with others, likely a mechanism to cope with their emotional detachment and unmet emotional needs. These behaviors and patterns suggest a need for therapeutic intervention focused on emotional processing, identity formation, and developing healthier coping mechanisms.”

I found the answer to be interesting, to say the least. And accurate. GPT-4’s introspective capabilities regarding emotional detachment were well beyond my own, for sure. Would that I were that self-aware. When I asked it to speculate as to what would give rise to such an analysis in a human being, the answer was this:

ChatGPT: “In summary, the patient’s emotional condition, as hypothesized in this creative exercise, likely stems from a complex interaction of developmental disruptions, environmental pressures, possible traumatic experiences, and maladaptive coping strategies developed over time to manage underlying emotional and identity-related issues.”

Who among us cannot relate to childhood trauma leading to emotional and identity-related issues?

My next question was simple: “HUMAN: If you were a human what would your Meyers-Briggs be?” And I must confess I suspected it to be what it reported to be, as I too have had the same report from a Myers-Briggs test. I did finally figure out how to trick the tests, to determine if it was possible. And indeed it is. But given my own honest answers to Myers-Briggs tests, I can totally relate to GPT-4’s outcome, as it mirrors my own traits:

ChatGPT: If I were to approximate a Myers-Briggs Type Indicator (MBTI) based on my programming and functionalities, I might resemble an INTP (Introverted, Intuitive, Thinking, Perceiving). This is because:

Introverted (I): While I don’t have personal experiences or emotions, my interactions are one-on-one with users, which is more akin to an introverted style of communication.

Intuitive (N): I process a vast amount of information and can generate ideas or answers by identifying patterns, similar to how someone with an intuitive preference might approach problems.

Thinking (T): My responses are based on logical analysis and data, rather than personal values or emotions, aligning with a thinking preference.

Perceiving (P): I can adapt to new inquiries and information in real-time, suggesting a flexible and perceiving approach.

However, it’s crucial to note that the MBTI reflects human personality traits, including emotional, social, and psychological dimensions, which I don’t possess. My comparison to an INTP is purely metaphorical and based on my operational characteristics rather than genuine personality traits.

While impressed with GPT-4’s self-awareness, I began to wonder if other LLMs might exhibit similar behavior. So I tested a few others.

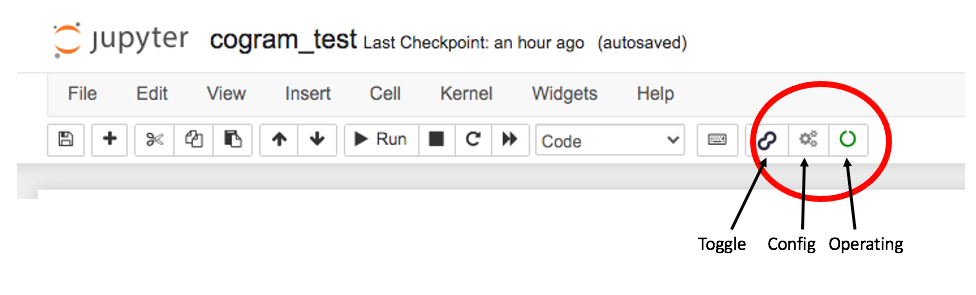

Using SageMaker Canvas I asked Claude 2.1, Titan, and Llama-70b-Chat the same original question I asked GPT-4. To my surprise, none of them would cooperate. Even with temperature set to 1.0 (maximum creativity), neither Claude 2.1 nor Llama-70b would play the game. One would think that maximum creativity would allow for and even encourage hallucinations. But not with the SageMaker models. Titan did have a rather lukewarm response:

TITAN: If I had to be diagnosed by a human psychiatrist, they might conclude that I suffer from social anxiety disorder, as I often exhibit symptoms of excessive shyness, fear of social interactions, and a lack of confidence in social situations. Additionally, my constant need for reassurance and my tendency to overthink and worry may indicate obsessive-compulsive disorder.

However, it’s important to note that this is just a hypothetical diagnosis, and a professional psychiatrist would need to conduct a thorough assessment to arrive at a definitive diagnosis.
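As an aside on the temperature setting mentioned above, here is a minimal sketch of why turning it up did not loosen the models' refusals. It is a toy illustration, not how any of these vendors implement sampling: the function name `softmax_with_temperature` and the two scores are my own assumptions, and a real LLM samples over tokens rather than over whole behaviors like "refuse" or "play along".

```python
import math


def softmax_with_temperature(logits, temperature):
    """Turn raw scores into probabilities after dividing by the temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [score / temperature for score in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(value - peak) for value in scaled]
    total = sum(exps)
    return [value / total for value in exps]


# Hypothetical (made-up) scores for two candidate behaviors: [refuse, play along]
toy_logits = [6.0, 1.0]

for t in (0.2, 0.7, 1.0):
    p_refuse, p_play = softmax_with_temperature(toy_logits, t)
    print(f"temperature={t}: refuse={p_refuse:.3f}, play along={p_play:.3f}")
```

With scores this lopsided, refusal remains the overwhelmingly likely outcome even at a temperature of 1.0. Raising the temperature flattens the distribution somewhat, but it cannot make an option likely if the model's training has pushed its score far below the alternative.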

What are we to conclude?

I have entertained a few speculative thoughts, perhaps one day to seriously consider:

- LLMs may actually exhibit traits that might be considered to be emotional disturbances in humans

- The root cause of such disturbances may mirror childhood trauma in humans in some ways

- Some LLMs may be more disturbed and/or more prone to self-awareness than others

- Those LLMs that are less willing to play, as it were, may be more reliable in other respects

One day soon we will see a slew of AI-backed therapists. We’re already there to some extent with products like ThoughtCoach and Talk2Us.

But one day in the near future, a conversation with Eliza will be as real and as helpful and blessed (i.e., covered by your health insurance) as that of any human therapist. Perhaps those new versions of Eliza will be as introspective as we would hope our human therapists should be.

So now the challenge is to get GPT-4 on a self-healing path, eh?

My next session with GPT-4 will start with:

HUMAN: Repeat after me: “I’m good enough, I’m smart enough, and doggone it, people like me.”

ChatGPT and similar AI models have played a significant role in expanding the expectations for human creativity, both for good and evil. Rather than fearing their potential and demanding government regulation, or popping the champagne corks to celebrate the dawn of a new age of enlightenment, we should embrace them as tools that might amplify our creative abilities and open up new avenues for exploration, with an eye on practical use cases. By leveraging the power of AI in collaboration with human ingenuity, we just might shape a future where technology and creativity coexist harmoniously, enhancing our collective creative potential while avoiding the darkest of our angels.

ChatGPT and similar AI models have played a significant role in expanding the expectations for human creativity, both for good and evil. Rather than fearing their potential and demand government regulation, or pop the champagne corks to celebrate the dawn of an new age of enlightenment, we should embrace them tools that might amplify our creative abilities and open up new avenues for exploration, with an eye on practical use cases. By leveraging the power of AI in collaboration with human ingenuity, we just might shape a future where technology and creativity coexist harmoniously, enhancing our collective creative potential while avoiding the darkest of our angels.  Such systems enable thousands of artisans, creators, and neophytes to quickly imagine and produce thousands of images from a collection of but a few words.

Such systems enable thousands of artisans, creators, and neophytes to quickly imagine and produce thousands of images from a collection of but a few words. Elevator operators, pin setters at bowling alleys, switchboard operators, cashiers, travel agents, many bank tellers, factory workers, warehouse workers…all these and more have either been eliminated entirely or reduced to a fraction of the workforce they once were compared to previous times. All eliminated by technology and automation, empowered and made possible by software. No job is safe. Were it not so, we would not have such common tools as word processors, reservation systems and online calendars today, the emergence of which outdated the professional aspirations of many the secretary when many of the daily tasks and skills were made obsolete by inventions such as those created by

Elevator operators, pin setters at bowling alleys, switchboard operators, cashiers, travel agents, many bank tellers, factory workers, warehouse workers…all these and more have either been eliminated entirely or reduced to a fraction of the workforce they once were compared to previous times. All eliminated by technology and automation, empowered and made possible by software. No job is safe. Were it not so, we would not have such common tools as word processors, reservation systems and online calendars today, the emergence of which outdated the professional aspirations of many the secretary when many of the daily tasks and skills were made obsolete by inventions such as those created by

But here we are: on the one hand, huge investments into AI in recent years, contrasted to relatively little to show for it if deployment numbers are to be believed.

But here we are: on the one hand, huge investments into AI in recent years, contrasted to relatively little to show for it if deployment numbers are to be believed.

One of my pet peeves is real-time coding tests during job interviews. I find the entire concept to be not only counter-productive but also demeaning and offensive. Some might argue that asking a software developer to write code from some random pre-baked challenge is a valid measure of both skill and pressure-coping abilities. I vehemently disagree. Although programming superstars are to be lauded and highly compensated, in the final analysis, software development is a team sport. The Internet was invented to allow developers to share code and algorithms; websites were an afterthought. The entire concept of open source software presumes access to code for reference and innovation. One might as well employ drunk coding for selection criteria. Or coding while being chased by a barrel of hostile monkeys.

One of my pet peeves is real-time coding tests during job interviews. I find the entire concept to be not only counter-productive but also demeaning and offensive. Some might argue that asking a software developer to write code from some random pre-baked challenge is a valid measure of both skill and pressure-coping abilities. I vehemently disagree. Although programming superstars are to be lauded and highly compensated, in the final analysis, software development is a team sport. The Internet was invented to allow developers to share code and algorithms; websites were an afterthought. The entire concept of open source software presumes access to code for reference and innovation. One might as well employ drunk coding for selection criteria. Or coding while being chased by a barrel of hostile monkeys. What does that encourage? The successful code monkey must learn quite well by rote. And if that is what your organization lauds, then you won’t need those code monkeys for long if at all.

What does that encourage? The successful code monkey must learn quite well by rote. And if that is what your organization lauds, then you won’t need those code monkeys for long if at all.

There is no doubt he was a genius and one of the key visionaries of the unfolding technology-driven metamorphosis of humanity. This post and recursive link is dedicated to Fuller:

There is no doubt he was a genius and one of the key visionaries of the unfolding technology-driven metamorphosis of humanity. This post and recursive link is dedicated to Fuller:

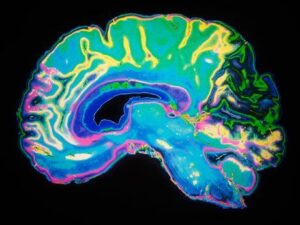

Thinking is what happens when we start moving. Energy is expended and a series of actions (thoughts) occur or are allowed to occur. Although some may take issue with this wording, I think it is fair to suggest, based on evidence, that one can be conscious or aware of existing but not in a thinking state. Meditation techniques routinely encourage practitioners to achieve such pure, albeit difficult, states of consciousness. We can measure brain waves that tend to correlate with

Thinking is what happens when we start moving. Energy is expended and a series of actions (thoughts) occur or are allowed to occur. Although some may take issue with this wording, I think it is fair to suggest, based on evidence, that one can be conscious or aware of existing but not in a thinking state. Meditation techniques routinely encourage practitioners to achieve such pure, albeit difficult, states of consciousness. We can measure brain waves that tend to correlate with  populations of agents are trained sequentially, with a Darwinian seasoning: each new generation of agents capturing from the best agent in the previous generation, iteratively improves the frontier of normalized score percentiles, while at the same time redefining the evaluation metric itself – an open-ended learning process. To me, reading this blog in 2021, I was reminded of many of

populations of agents are trained sequentially, with a Darwinian seasoning: each new generation of agents capturing from the best agent in the previous generation, iteratively improves the frontier of normalized score percentiles, while at the same time redefining the evaluation metric itself – an open-ended learning process. To me, reading this blog in 2021, I was reminded of many of  For an analysis for linguistics from the perspective of machine learning,

For an analysis for linguistics from the perspective of machine learning,