The Temerity of a TPU

I attended a live broadcast of a recent Google I/O Conference here in Utah, akin to a Super Bowl Party for geeks, and I came to a minor epiphany. My hope in going was to learn a bit about Google’s Cloud offerings in order to better compare them with AWS. But not much in that vein was covered, at least not at the sessions televised to the Xactware meeting rooms in Lehi. Evidently the crowd gathered there had more of an Android developer penchant, and there’s nothing wrong with that. But my choices for sessions of interest were in the minority. And with only a limited number of rooms to assemble in, most of the sessions I had hoped to hear were not shown live in Lehi. Luckily, most of it was captured by our friends at Google and posted within hours of the conference.

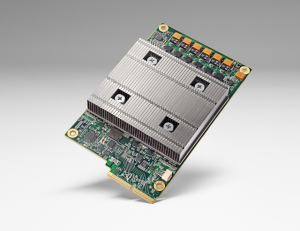

One thing, however, that did grab my attention at one of the live sessions, and has had me ruminating for a couple of weeks since, is the mention in one of the keynotes of Google’s Tensor Processing Unit (TPU).

In a recent discussion with my friend Wil Bown, AI/VP developer extraordinaire, he mentioned the huge performance boost Bitcoin mining efforts have achieved from burning the essential code into ASICs, boosting performance well beyond FPGAs, which had previously trumped GPU banks, which themselves only a year or so ago were the cutting edge for coin miners.

So, ASICs. Yeah, that does make sense. In some instances, as layers of software infrastructure stratify, burning the full application stack to a chip will yield that final incremental competitive advantage. In fact, we could assert in general terms that entire industries have been built on that principle. For example, before there was Cisco, there was software running on vanilla UNIX systems that functioned as switches and routers. Most often the higher costs of ASIC production limit their adoption to military applications, or aerospace, or manufacturing processes, though sometimes a more common use case emerges, like Cisco, that can utterly change everything.

But to learn that Google assembled a raised floor environment consisting of racks of TPU-laden servers, which was a critical factor in their Go Champion win earlier this year, gave me pause. TensorFlow became an open source endeavor just a few months ago. In my mind, open source is the beginning of the journey, and certainly not the end. To therefore commit the application as such in silico seems quite premature, if not remarkably misguided. It’s too early.

Machine Pareidolia

Pareidolia is a psychological phenomenon involving a stimulus (an image or a sound) wherein the human mind perceives a familiar pattern where none actually exists. The face on Mars, or the Messiah in a piece of toast, for example. We humans see all sorts of patterns in the random distributions around us. Every named constellation is a pareidoliac construct. We seem to be hardwired to see faces in clouds, trees, rocks, potatoes…it’s rather common. But we’re also aware that the faces we see in clouds are not really faces. They’re clouds. Machines, on the other hand, might not be tuned as well.

Consider the school bus and the ham sandwich.

Clearly we cannot place deep confidence in Deep Neural Network implementations like TensorFlow just yet. But the fact is, we already have: Google has already baked it in and raised the floor, as it were. Therein lies the temerity.

The hacking possibilities are legion. If I know I can fool an image processing system with cleverly crafted images, then any security system relying on a DNN component is vulnerable. Any digital visual processing function, from facial recognition to autonomous vehicle piloting, can therefore be rather easily compromised.
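To make the idea of a "cleverly crafted image" a little more concrete, here is a toy sketch. It is not TensorFlow and not a real DNN: the linear scorer, the weights, the bias, and the 100-"pixel" input are all hypothetical values picked by hand for illustration. It only shows the general flavor of the trick, namely that many tiny per-pixel nudges, each aligned with what the model is sensitive to, can add up to a different answer.

```python
# Toy illustration only: a hypothetical linear "classifier", not a real DNN.

def score(weights, bias, pixels):
    """Positive score means 'school bus', otherwise 'ham sandwich'."""
    return sum(w * p for w, p in zip(weights, pixels)) + bias


def label(weights, bias, pixels):
    return "school bus" if score(weights, bias, pixels) > 0 else "ham sandwich"


def sign(value):
    """Return -1, 0, or 1 depending on the sign of value."""
    return (value > 0) - (value < 0)


def perturb(weights, pixels, epsilon):
    """Nudge every pixel by at most epsilon in the direction that raises the score.

    For a linear model the gradient of the score with respect to the input
    is simply the weight vector, so this is a sign-of-gradient step.
    """
    return [p + epsilon * sign(w) for w, p in zip(weights, pixels)]


# Hypothetical weights and input, chosen by hand for the demo.
weights = [0.5 if i % 2 == 0 else -0.5 for i in range(100)]
bias = -1.0
sandwich = [0.5] * 100  # a flat gray "image"

adversarial = perturb(weights, sandwich, epsilon=0.05)

print(label(weights, bias, sandwich))     # label for the original input
print(label(weights, bias, adversarial))  # label after the small nudge
```

With these made-up numbers the original input is labeled a ham sandwich and the nudged one a school bus, even though no pixel moved by more than 0.05 on a 0-to-1 scale. Real attacks on deep networks are more involved, but they lean on the same accumulation of small, well-aimed changes.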

A Different Kind of Turing Test

In addition to my misgivings about the Google announcement, another question comes to mind as I ponder the TPU and the adjacent possible it may now entail: Will DNN systems, trained independently from different data sets, draw similar pareidoliac conclusions?

In other words, just as two people might see a face in the same picture of a cloud, might two systems see the same school bus in the image of a ham sandwich? If so, is that perhaps another test for ‘intelligence’, albeit not the human kind? Might a different kind of Turing Test then emerge to measure the consciousness of our digital offspring?

The Temerity

I applaud bold advancements in technology. I admire competitive juices. But I’m just a little concerned we may now be at a very critical juncture in spacetime: a time in which the rate of innovation finally and fully exceeds the ability of the fitscape to adequately test it.

When the rate of innovation exceeds the ability of the fitness landscape to adequately test the innovation for fitness, bad stuff will result — that is my fear. The temerity of the TPU may be a step too soon, or too far, or both.