Let Us Reason Together – part 1

“‘Come now, and let us reason together,’

Says the Lord,

‘Though your sins are like scarlet,

They shall be as white as snow;

Though they are red like crimson,

They shall be as wool’” (Isaiah 1:18)

Ten years ago I made the decision to fully restore my hands-on coding skills, spend more of my cycles on learning and coding and less on management, consulting, and management consulting. It was one of the best career decisions I ever made.

During most of that decade my focus has been on all things AI, the most recent three years of which have been devoted to Natural Language Processing (NLP) on behalf of two different firms — one very large and one very small. Prior to those engagements my pursuit of data science skills and machine learning knowledge was more general.

For the sake of this collection of blog entries, please consider the thesis of Thinking, Fast and Slow; we humans use two complimentary systems of thought:

– FAST, which operates automatically. It is involuntary, intuitive, effortless

– SLOW, which solves problems, reasons, computes, concentrates and gathers evidence

Andrew NG, one of the pioneers of modern AI, is famously quoted as saying,

“If a typical person can do a mental task with less than one second of thought, we can probably automate it using AI either now or in the near future.” — Andrew Ng

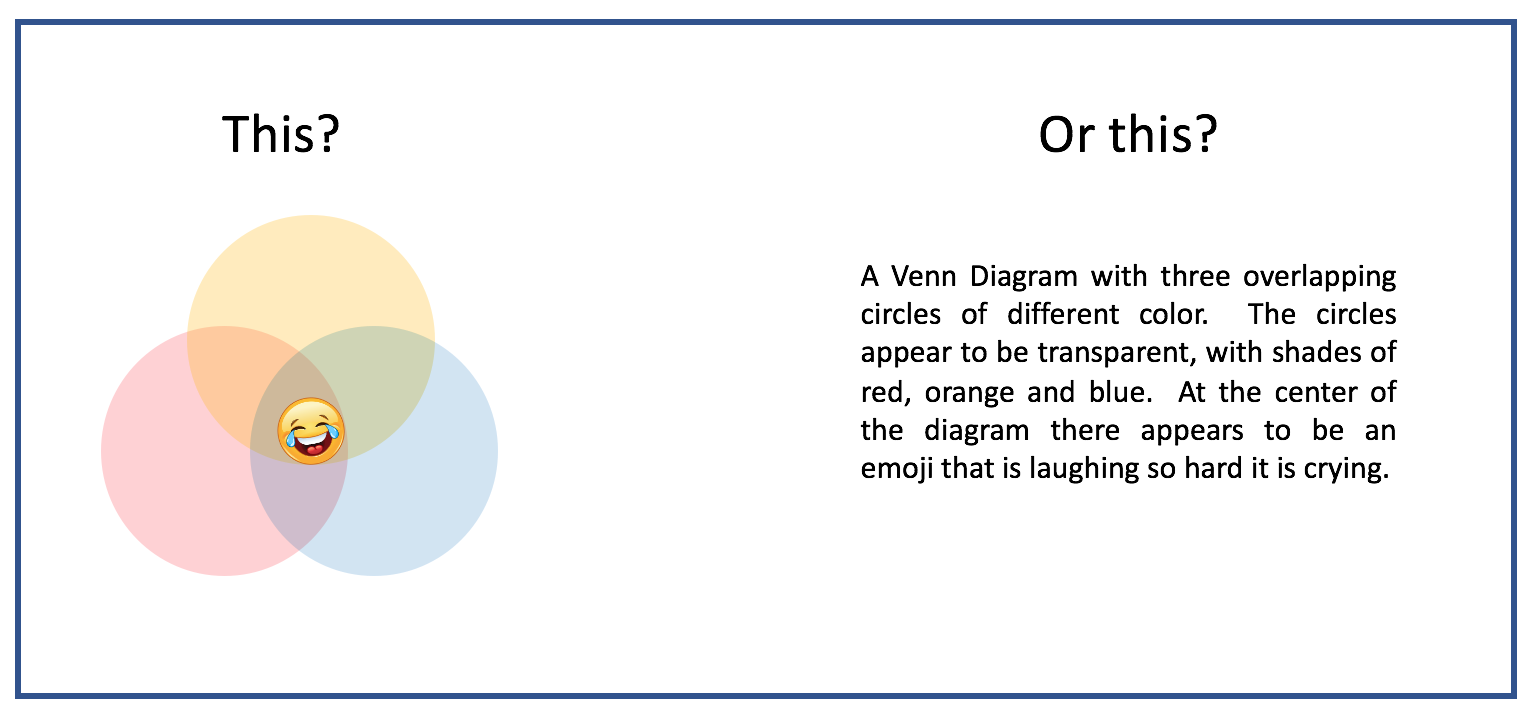

If Ng is correct, then the probability of creating a Machine Learning model to meaningfully process (i.e.: extract meaning from) the image in the example above is far greater than the probability of finding similar utility with an NLP model to process the associated text.

As the adage goes, “A picture is worth a thousand word.” But those words do not adequately convey the inverse of the relationship. How many words does it take to paint a picture? And how do we process those words with a machine? More importantly, what do those words mean?

Why is NLP so hard?

Recent advances in NLP have made headlines, to be sure. From BERT to ERNIE to GPT-3 and all the variants in between, the promise of Turing Test worthy models seems to be just around the proverbial corner. But we are still not there. Not even with 500 billion tokens, 96 layers and at a cost of $12 million for a single training run.

In my view, when it comes to Machine Learning and Artificial Intelligence, NLP is far more difficult for machines to get right than any other facet of AI, and it has very much to do with the three characteristics listed below and the fact the when humans process words, whether written or spoken, both FAST and SLOW thinking are involved. And more.

My focus on NLP over the past three years has led me to a few general assertions regarding human language, which I now share:

-

1. Human language has infinite possibilities. Regardless of the language or vocabulary, an infinite number of sentences are possible.

- Machine Translation: Frequently used NLP applications, machine translation enables automatic translation from one language to another (eg: English to Spanish) with no need for human involvement.

- Social Media Monitoring: Controversial at best, increasingly used to detect and censor viewpoints that may not conform to ‘factual’ analysis.

- Sentiment Analysis: Helping businesses detect negative reviews of products and service.

- Chatbots and Virtual Assistants: Automate question/answer as much a possible to make human customer assistance much more productive.

- Text analysis: Name/Entity Recognition (NER) is one example of text analysis use cases.

- Speech Recognition: Alexa, Siri, Google, Cortana and others.

- Text Extraction: Search, but smarter.

- Autocorrect: Spell Check and more

2. Text communication alone is multidimensional and can be inherently ambiguous.

3. Human communication is comprised of far more than words.

Evidence supporting the assertions above are rather trivial to gather so I make no effort here to prove or validate the above. Please take issue with these as you see fit. I would be pleased to learn if and why any of these assertions are somehow invalid. Assertion #1 above may be debatable from a computability perspective, but for the sake of these blog entries, let’s just go with it and not get side-tracked.

So…NLP is hard. Really hard. That’s not to say we can’t make progress with use-case specific advances. Leaving the Turing Test out, what can we do? And how can we improve? This series of blog entries is devoted to the exploration of hybrid approaches to solving some of the more difficult problems machine learning enthusiasts face when processing human language. Today a handful of NLP use cases are business worthy. Application of NLP can be found in a range of business contexts, including e-commerce, healthcare and advertising. Today, the following are the top NLP use cases in business:

These general buckets of NLP use cases have seen NLP become baked-in components for a growing list of applications and services. While the utility of these functions grows daily, so does frustration levels (who among us has not wanted to throw a smart phone against the wall when speaking with an impersonal, clumsy chat bot?).

It is clear that NLP in practice is here to stay. But even with (unwarranted) Turing-Test-passing declarations made by otherwise intelligent humans for innovations such as Google Duplex, it is clear that the use-case specific advances we tout are not the AGI Turing was looking for at all.

Consider the context for Turing’s recommendation of the Turing Test to begin with: Can machines think? How can we know when a machine is intelligent juxtaposed to human intelligence? A programmable assistant to make calls on my behalf, regardless of how human-like it may sound, is in no way capable of handling spontaneous interactions easily handled by us flesh-and-blood folk. Our human scope of spontaneity is infinite, whereas the bot is not. At least not yet.

So what are we to do? When it comes to NLP what comes next? I will examine a few real possibilities in subsequent entries.

Leave a Reply