CODE EX MACHINA

One of my pet peeves is real-time coding tests during job interviews. I find the entire concept to be not only counter-productive but also demeaning and offensive. Some might argue that asking a software developer to write code from some random pre-baked challenge is a valid measure of both skill and the ability to cope with pressure. I vehemently disagree. Although programming superstars are to be lauded and highly compensated, in the final analysis, software development is a team sport. The Internet was invented to allow developers to share code and algorithms; websites were an afterthought. The entire concept of open source software presumes access to code for reference and innovation. One might as well use drunk coding as a selection criterion. Or coding while being chased by a barrel of hostile monkeys.

Asking a software developer to write code on command, without giving said developer the means and latitude to satisfy the requirements using the references, sources, and tools normally used in software development, is like asking basketball players to try out for a team with no ball, no basket, no hardwood court, and no teammates with whom to interact. “Let me see you dribble without a ball.” It is akin to asking a musician to explain the tools of music composition while composing nothing.

Such a selection process for any sports team or music ensemble would be absurd and pointless. In my view, the same is true of any organization or manager that requires a potential hire to pass a real-time coding exam. And yet, there’s an entire industry built around such coding challenges.

So today I am writing to complain about the absurdity of coding challenges, about the long line of code monkeys who inevitably queue up to answer such challenges without question, and about the advent of code generation from natural language processing (NLP) innovations, which will replace many of those code-challenge monkeys in short order. If you hire software developers based on timed code challenges in which you observe each keystroke and allow no interaction, discussion, or reference to outside sources, you are operating under the mistaken belief that a developer who does very well under such circumstances knows how to code. In fact, the LITANY of results from a Google query on the topic should be enough to inform, if not dissuade, anyone considering such an approach: if you can easily find the solution to your coding test via a Google search, chances are, so can your potential new hire.

What does that encourage? The successful code monkey must learn quite well by rote. And if that is what your organization lauds, then you won’t need those code monkeys for long if at all.

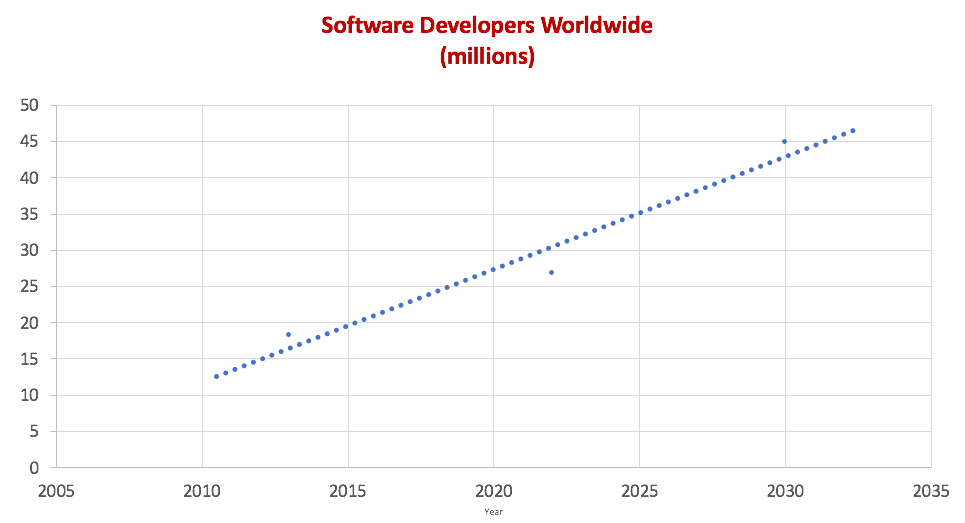

With the number of software developers worldwide tripling between 2010 and 2030, it stands to reason that there is a growing queue of coders ready to line up for job opportunities. Global human population in 2030 is projected to be roughly 23% greater than it was in 2010. So it also stands to reason that tripling the number of software developers over the same period means a steadily growing share of human brain power is devoted to the various tasks surrounding software development. Thus, the rise of the code monkey is also to be expected. Worse than the code monkey is the keeper of a barrel of code monkeys, if the keeper actually views those monkeys as such. How can one tell such a keeper? Requiring the code-on-command test is a really good clue.
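To make the share argument concrete, here is the back-of-the-envelope arithmetic using only the two figures above (developers roughly ×3 and population roughly ×1.23 between 2010 and 2030). It is a sketch of growth factors, not head counts, and it inherits whatever uncertainty the underlying projections carry.

```python
# Growth factors from 2010 to 2030, taken from the figures cited above.
developer_growth = 3.0    # number of software developers roughly triples
population_growth = 1.23  # global population roughly 23% larger

# The developer share of the population changes by the ratio of the two factors.
share_growth = developer_growth / population_growth

print(f"Developer share of population grows by about {share_growth:.2f}x")
# prints: Developer share of population grows by about 2.44x
```

In other words, if both projections hold, developers would make up roughly two and a half times as large a slice of humanity in 2030 as they did in 2010.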

But as with so many ML/AI use cases popping up these days, the code-on-command test too will soon fall by the wayside, overtaken by more sensible approaches to probing for technical expertise, or at least the ability to obtain technical expertise. And what of those managers who obstinately continue to employ such tests? Obviously market forces will reduce those so inclined to the dinosaur ashes they were always intended to become; the unwavering power and force of ephemeralization will see to that.

Code ex machina

To bolster my claim that the code-on-command test to determine level of technical expertise is one of the primary destructive forces in the known universe, I point to advances in NLP for support and suggest that code generation by ML/AI models will soon supplant the jungle of code monkeys currently in training. So I suggest an experiment: Find a few of those code challenge questions and ask a machine for a solution.

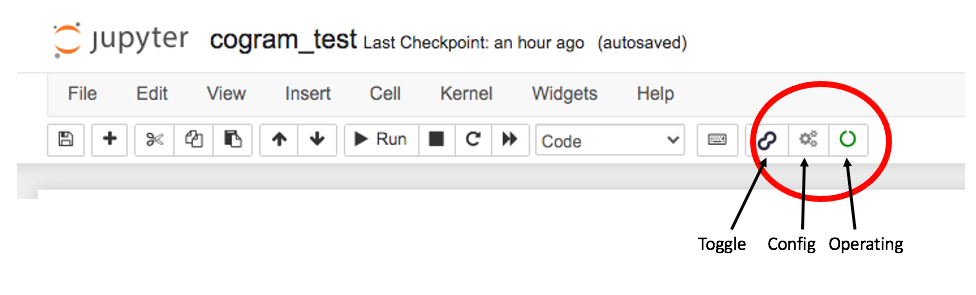

For this experiment, from a field of choices, I picked one fairly easy-to-deploy application for generating Python code. Python, as you may know, is one of the top programming languages for ML/AI and has even eclipsed Java in popularity in recent months. The Cogram plugin for Jupyter Notebook seemed to be the ideal target. After an hour or so of configuration futzing, I did manage to get a notebook fired up with the code completion extension on display.

The Toggle switch is important. If it is set to the ‘on’ (open) position, hitting return after a comment in Python will start the code generation process, which you may not want. The default position is open, so on a fresh notebook, close it until you want code. Enter your comment(s), then set the Cogram Toggle to open, which reveals the Configuration icon as well as the Operating icon. Under Configuration you can set Creativity and the number of Suggestions (code snippets) to generate.

From the HELP inline documentation:

👉 Cogram works best with some context. In an empty Jupyter Notebook, start out with one or two cells where you write code manually, then begin using Cogram.

💡 You can generate code by typing a comment, then waiting for Cogram’s completion:

```python
# create an array containing integers from 1 to 10, and save to file
```

or by starting a line of code:

```python
def merge_dicts
```

👉 Cogram suggests code automatically when you stop typing. You can switch to manual code generation, triggered with the Tab key, in the Cogram menu (⚙️).

👉 You can cycle through different code suggestions with the arrow keys.
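To give a feel for what those two help-text prompts are asking for, here is a hand-written sketch of code that could plausibly complete them. It is an illustration only, not output captured from Cogram; the temp-directory file path and the variadic signature of `merge_dicts` are my own assumptions.

```python
import os
import tempfile

# Hand-written illustration, NOT actual Cogram output.

# create an array containing integers from 1 to 10, and save to file
numbers = list(range(1, 11))
# Hypothetical output location: numbers.txt in the system temp directory.
path = os.path.join(tempfile.gettempdir(), "numbers.txt")
with open(path, "w") as f:
    f.write("\n".join(str(n) for n in numbers))


def merge_dicts(*dicts):
    """Merge any number of dicts; on duplicate keys, later dicts win."""
    merged = {}
    for d in dicts:
        merged.update(d)
    return merged


print(merge_dicts({"a": 1}, {"a": 2, "b": 3}))  # {'a': 2, 'b': 3}
```

On Python 3.9 and later, two dicts can also be merged with the `|` operator, with the same "later key wins" behavior.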

The hypothesis for my quick experiment is simple: Modern code generation by machine can match the output of humans forced to endure time-boxed coding tests during interviews.

In all fairness, my quick experiment was not meant to rigorously prove or disprove the hypothesis, but rather to provide an interesting set of activities from which to derive a blog post of complaint to be filed with all the code monkey keepers on the planet. What I discovered during these activities was how well platforms like Cogram can augment programming productivity. Going forward, I will want to use Cogram as much as any other required tool. I think innovations like those Cogram can monetize will be as important as some of the more useful libraries and packages we take for granted today.

Here is but one bit of code generated by the Cogram extension on my notebook:

```python
# Print a fibonacci series using recursion.

def fibonacci(n):
    if n == 0:
        return 0
    elif n == 1:
        return 1
    else:
        return fibonacci(n-1) + fibonacci(n-2)

n = int(input("Enter the number of terms: "))
for i in range(n):
    print(fibonacci(i))
```

Bear in mind, the ONLY line of code I wrote was the comment and then I hit return. Cogram did the rest. Also bear in mind this specific question was taken from the long list of typical coding questions asked in the coding tests I find to be so objectionable. So listen up, code monkeys and keepers: the machines are coming for your jobs. You’ve been warned.
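For the curious, the generated function is the textbook recursive definition, and it is correct. A common follow-up to this particular interview question is cost: the naive recursion recomputes the same terms over and over, so its running time grows exponentially with `n`. Below is a minimal hand-written sketch (not Cogram output) that caches results with the standard library’s `functools.lru_cache`; the helper name `fibonacci_series` is hypothetical and simply returns the terms instead of reading `input()` and printing them.

```python
from functools import lru_cache


@lru_cache(maxsize=None)  # remember every fibonacci(k) already computed
def fibonacci(n):
    if n < 2:  # fibonacci(0) == 0, fibonacci(1) == 1
        return n
    return fibonacci(n - 1) + fibonacci(n - 2)


def fibonacci_series(count):
    """Hypothetical helper: return the first `count` terms as a list."""
    return [fibonacci(i) for i in range(count)]


print(fibonacci_series(10))  # [0, 1, 1, 2, 3, 5, 8, 13, 21, 34]
```

With caching, each term is computed once, so producing the whole series takes linear rather than exponential time.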

Now, one might ask, “If not coding tests, how then can we determine the coding abilities of a potential hire?” That’s a fair question, to which I respond with a simple question of my own: “What specifically are we aiming to measure with such tests?”

Are we looking for the most Pythonic coders? Is that really what we need? Do we value rote memorization skills? Walking Wikipedias? Or is it something else? After all, programming languages come and go. Just a few years ago it was Java. Before Java it was C/C++. And now it’s Python. Tomorrow may very well find JavaScript or Go or Julia to be the most in demand. Do we want SMEs in the nuances and corner cases of those specific languages? Or do we want monkeys who can think critically, learn easily, and express solutions using abstract logic and pseudo code?

Another question I would ask is also very simple: In an age when no-code/low-code platforms are receiving the lion’s share of investment, why would we insist on hiring code monkeys? Wouldn’t we be better off with at least a balance of no-code monkeys?

Think Outside the Language

Python is awesome. But it’s not the last stop on this journey. As the rate of technological innovation increases, and the engines of data generation keep producing exponential bursts of more and more and more, new, more succinct, and more expressive ideas must emerge to reduce human cognitive load. Nowhere is it written that code must be in any language at all. If nothing else, the emergence of code generation by machine should teach us that. And coming from NLP? Were we looking for code generation? No! We were looking for some machine understanding of written human text, something to take us a step closer to passing the Turing Test, perhaps. But code generation? No! That happy by-product of modern NLP is but one small consequence that was not, to my thinking, intended when the first steps toward NLP were suggested over a hundred years ago.

I would implore the monkey-house keepers to rethink the use of coding tests and I would encourage the code monkeys to rebel — throw off the chains of time-boxed coding tests. Just say no. Explain why such tests are counter-productive. Think outside the language and emphasize the innate ability we all share to quickly learn and adapt. Isn’t that ability what sets us monkeys apart in the first place?

Please see my GitHub repo for the Jupyter Notebook from this fun experiment. And if you do code and have yet to experiment with Cogram, I strongly suggest kicking those tires.
